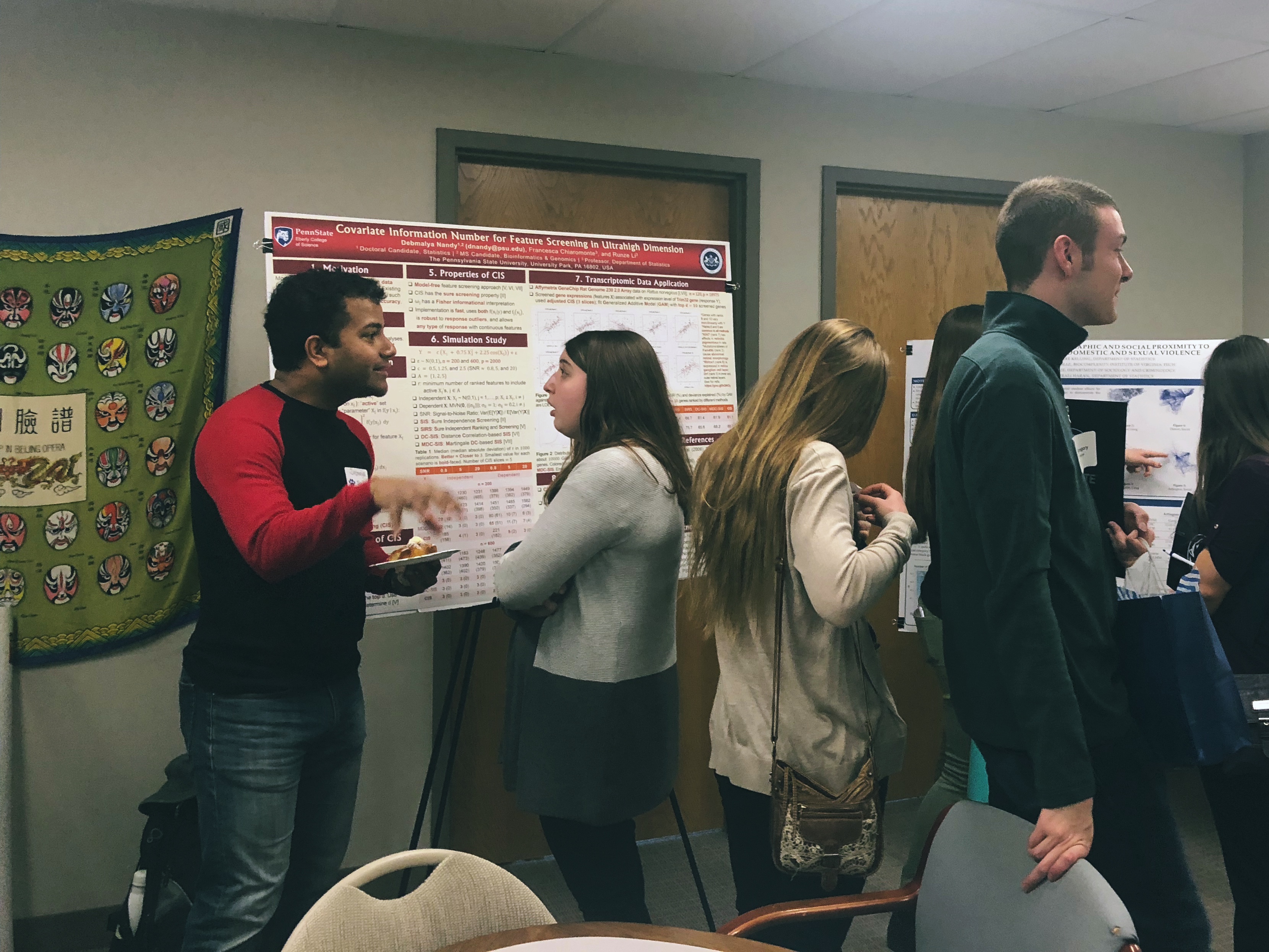

Five years into a statistics program and I’m still shocked at my decision to go down this path. Switching fields going into my PhD was daunting, and I’ve spoken before about how I was convinced to turn Full Stats Stud during my visit to the program’s recruitment day. Since then I’ve helped out with each batch of prospective students as they too are welcomed to Happy Valley and introduced to what earning a PhD in Statistics here could mean for them. I want to share these experiences and a little bit of what’s going on behind the curtain for our readers. If you are going through a similar process, here’s one look at what a recruitment day looks like and what to expect. We’d also love to hear from others about their experiences, as this process varies GREATLY between departments and universities!

In PSU Statistics, we invite domestic students who already have offers to our PhD program to come to campus for a day of information and fun. These students have already been accepted, and we hope to convince them that they’ll find a great environment for spending the next 4-6+ years. Not all recruitment events happen after offers go out, and that could make for a more stressful visit. Even visiting a department you do have an offer from can be extremely intimidating. I know I am STILL intimidated sometimes! But one of the most important tips I have is to remember that you have worked hard and earned a spot! The department should be working hard to impress you and showcasing what its program has to offer.
